Learn about the past and present of autonomous driving

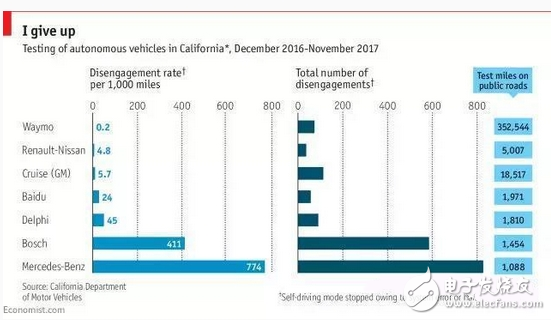

The era of the modern car began with a competition. In the early 1890s there was strong interest in the emerging technology of the horseless carriage, which was expected to combine the speed of the train, the flexibility of the horse-drawn carriage and the convenience of the bicycle. The French newspaper Le Petit Journal used its considerable publicity clout to organize a competition to find out which source of motive power was best: steam, electricity or gasoline. It invited entrants to drive from Paris to Rouen, 79 miles away. Entries were judged not on speed but on whether they were safe, easy to use and commercially viable. The competition was held in July 1894: a total of 21 newly invented vehicles set off from Paris, watched by crowds of onlookers, and 17 of them finished. Along the way, seven dogs were run over and one cyclist was injured. The grand prize went to an inventor named Gottlieb Daimler. Nine of the cars in the competition were powered by the internal combustion engine he had invented, and four of them shared first prize. The judges credited Daimler with providing vehicles with "internal power converted from oil or gasoline fuel", and the new machines acquired a French name: "automobile". Daimler's victory established the automobile's dominance in the 20th century, and the word soon spread into English and other languages.
Interestingly, the era of the modern self-driving car also began with a competition. In March 2004 DARPA, the US military's research agency, organized a race in the Mojave Desert that required driverless vehicles to cover a 150-mile off-road route. A total of 21 teams qualified, but after pre-race evaluations and accidents only 12 vehicles took part. Because of mechanical failures, vehicles getting stuck in sand and other problems, none of them finished. Carnegie Mellon University's "Sandstorm" did best, covering 7.4 miles before getting stuck; as it tried to free itself, its front wheels caught fire. It seemed that DARPA had set the bar very high. Yet when it held another race in October 2005, 5 of the 23 participating teams completed the 132-mile course, and all but one of the remaining teams beat the previous year's 7.4-mile record. A team led by Sebastian Thrun of Stanford University won first place, and Carnegie Mellon's "Sandstorm" came second. In just 18 months, autonomous driving had gone from impossible to possible. In the third DARPA competition, in November 2007, vehicles had to complete tasks in a simulated urban environment, dealing with road signs, traffic signals and other vehicles. Six of the 11 teams completed this more complex challenge. This rapid progress led Google to set up a self-driving car project, led by Thrun, in 2009. Since then, veterans of the DARPA contests have gone on to work on autonomous driving at Google, Uber, Tesla and many startups. In 2012 self-driving prototypes began appearing on public roads in the United States. They have since traveled millions of miles and become safer and more reliable, but the technology is still far from large-scale deployment.
A truly self-driving car must solve three distinct tasks: perception (understanding what is happening in the world around it), prediction (determining what will happen next) and driving policy (taking appropriate action). Thrun said the last task is the easiest, accounting for only about 10% of the problem; perception and prediction are the harder parts. Self-driving cars perceive the world through sensors such as cameras, radar and lidar. Lidar works like radar but uses pulses of invisible light, and builds a high-precision 3D map of the surrounding area. The three kinds of sensor complement one another: cameras are cheap and can see road markings but cannot measure distance; radar can measure distance and speed but cannot capture fine detail; lidar provides good detail but is expensive and is easily confused by snow. Most people working on autonomous driving believe that several kinds of sensor must be combined to ensure safety and reliability. (Tesla is a notable exception: it aims to achieve full autonomy without lidar.) High-end lidar systems currently cost tens of thousands of dollars, but startups are developing new solid-state lidars that are expected eventually to bring the price down to a few hundred dollars. Having combined the data from its sensors, the car must identify the objects around it: other vehicles, pedestrians, cyclists, road markings, road signs and so on. Humans are much better at this than machines, which must be trained on large numbers of carefully labeled examples before they acquire the ability. One way to obtain such examples is to pay people to label images by hand. Seattle-based Mighty AI has an online community of 300,000 people who label street-scene images for many automotive customers.
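
Because cameras cannot measure distance while radar and lidar can, with different precision, a car has to decide how much to trust each range estimate. One textbook way to combine independent estimates is inverse-variance weighting, sketched minimally below. This is an illustrative technique, not the method of any company mentioned here; the `RangeReading` type, the `fuse_ranges` function and the noise figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    """One sensor's estimate of the distance to an object (hypothetical type)."""
    sensor: str
    distance_m: float  # estimated distance, in meters
    std_dev_m: float   # assumed 1-sigma measurement noise, in meters


def fuse_ranges(readings: list[RangeReading]) -> tuple[float, float]:
    """Combine independent distance estimates by inverse-variance weighting.

    Each reading is weighted by 1 / variance, so less noisy sensors count more.
    Returns the fused distance and its standard deviation.
    """
    if not readings:
        raise ValueError("need at least one reading")
    if any(r.std_dev_m <= 0 for r in readings):
        raise ValueError("noise estimates must be positive")
    weights = [1.0 / (r.std_dev_m ** 2) for r in readings]
    total_weight = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total_weight
    # The fused estimate is tighter than any single input.
    fused_std = (1.0 / total_weight) ** 0.5
    return fused, fused_std


# Hypothetical readings; the camera is omitted because it cannot measure distance.
radar = RangeReading("radar", distance_m=40.0, std_dev_m=0.5)
lidar = RangeReading("lidar", distance_m=40.4, std_dev_m=0.1)
distance, sigma = fuse_ranges([radar, lidar])
print(f"fused distance: {distance:.2f} m (±{sigma:.2f} m)")
```

Here the hypothetical lidar reading (assumed noise 0.1 m) dominates the radar reading (assumed 0.5 m), so the fused estimate lands close to the lidar value. A Kalman filter extends the same weighting idea to estimates that change over time.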
"We want human judgment built into the car," said Daryn Nakhuda, head of Mighty AI, "so we need the help of human expertise." Images from video games such as Grand Theft Auto, which closely resemble real street scenes, can also help: because the game software knows everything in the scene, it can label these images precisely, so they too can be used for training. Thrun said the hardest things to identify are rare ones, such as debris on the road or plastic bags blowing across a highway. Recalling the early days of Google's self-driving project, he said: "Our perception module could not distinguish whether the object was a plastic bag or a flying child." Puddles on the road also confused the recognition system. Combining data from multiple sensors, however, can reveal whether an object on the road is a solid obstacle. The sensor data can also be compared with data collected previously by other vehicles traveling the same road, a process of sharing known as "fleet learning". The pioneers of autonomous driving have accumulated a lot of such data, which gives them an advantage, but some startups are now making and selling ready-made high-precision maps for autonomous vehicles. Once a car has recognized everything around it, it must immediately predict what will happen in the next few seconds and decide how to respond. Road signs, traffic lights, brake lights and turn signals provide some clues. In some respects, however, autonomous vehicles still lag behind human drivers, who are good at handling unexpected situations such as roadworks, broken-down vehicles, transport trucks, emergency vehicles, fallen trees or bad weather. Snow is a particular challenge: lidar systems must be carefully tuned to ignore falling snow, and snow on the road also reduces the accuracy of high-precision maps.
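
The simplest baseline for predicting what will happen in the next few seconds is to assume that every tracked object keeps its current velocity. The sketch below does exactly that and checks whether a hypothetical cyclist's extrapolated path enters the car's lane. The `Track` type, the 3-second horizon and the 1.75 m lane half-width are assumptions for illustration; production prediction systems are considerably more elaborate.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """A tracked object relative to the car (hypothetical type).

    x runs along the road, y across it; y = 0 is the center of the car's lane.
    The car's own motion is ignored in this sketch.
    """
    x: float   # meters
    y: float   # meters
    vx: float  # meters per second
    vy: float  # meters per second


def predict_positions(track: Track, horizon_s: float = 3.0,
                      step_s: float = 0.5) -> list[tuple[float, float]]:
    """Extrapolate future positions assuming the object keeps its current velocity."""
    steps = int(round(horizon_s / step_s))
    return [
        (track.x + track.vx * k * step_s, track.y + track.vy * k * step_s)
        for k in range(1, steps + 1)
    ]


def enters_lane(track: Track, lane_half_width_m: float = 1.75, **kwargs) -> bool:
    """True if any predicted position falls inside the car's lane."""
    return any(abs(y) <= lane_half_width_m for _, y in predict_positions(track, **kwargs))


# A cyclist 20 m ahead, 4 m to the side, drifting toward the lane at 1 m/s.
cyclist = Track(x=20.0, y=4.0, vx=0.0, vy=-1.0)
print(enters_lane(cyclist))  # True: y reaches 1.5 m after 2.5 s
```

A car using this baseline would treat the cyclist as a potential conflict within three seconds; shortening the horizon to one second would not flag it, which shows how much the choice of horizon matters.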
Although high-precision mapping is still under development, it is already helpful in certain limited areas where detailed maps are available and the weather is usually good. This explains why sunny, well-planned Phoenix has become a popular city for testing autonomous vehicles, whereas Pittsburgh, with its bad weather, is a harder place to test. Cruise, a self-driving startup acquired by General Motors, chose to test on the complicated streets of downtown San Francisco, and its performance has been impressive. Kyle Vogt, the founder of Cruise, believes that testing in a densely populated environment means cars encounter unusual situations more often, so they learn faster. When an autonomous vehicle is confused and does not know how to respond, or makes a wrong decision, the safety engineer in the driver's seat takes over. This is called a "disengagement", and the number of disengagements per thousand miles provides a rough measure for comparing autonomous-driving companies (see chart above). It is best, however, not to treat a disengagement as a failure: disengagements actually help an autonomous-driving system learn from experience and improve. Noah Zych, head of safety at Uber's self-driving car unit, said that sensor data recorded in the run-up to a disengagement can reveal what went wrong. The same problem can then be reproduced in simulation and the software modified. "We can test again and again, change scenarios and analyze the different outcomes," Zych said; the improved software is eventually deployed in real cars. Even once autonomous vehicles are widely deployed, they will still occasionally need human help. Christophe Sapet, CEO of Navya, a maker of driverless shuttles, cites an example: on a two-lane road, a self-driving car got stuck behind an abandoned truck because it would not cross the solid line in the middle of the road.
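
The disengagement metric is simple arithmetic: divide the number of disengagements by the miles driven and scale to 1,000 miles. A minimal sketch follows; the fleet names and figures are invented for illustration and are not data for any real company.

```python
def disengagements_per_1000_miles(disengagements: int, miles: float) -> float:
    """Rate of human takeovers, normalized to 1,000 miles of driving."""
    if miles <= 0:
        raise ValueError("miles driven must be positive")
    return disengagements * 1000.0 / miles


# Invented figures for two hypothetical fleets: (disengagements, miles driven).
fleets = {
    "Fleet A": (50, 100_000),
    "Fleet B": (12, 4_000),
}

# Sort from fewest to most takeovers per 1,000 miles.
ranked = sorted(fleets.items(), key=lambda item: disengagements_per_1000_miles(*item[1]))
for name, (d, m) in ranked:
    print(f"{name}: {disengagements_per_1000_miles(d, m):.2f} per 1,000 miles")
```

Because fleets drive in very different conditions (compare sunny Phoenix with downtown San Francisco), such rankings are only a rough guide.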
This happened because the self-driving car is programmed to obey traffic rules rather than improvise. A human driver, as long as no traffic was coming the other way, would simply cross the solid line to get around the truck. Navya's self-driving vehicles turn to a remote monitoring center for help, where human operators can see live video from the vehicle's cameras. In a situation like the one just described, the operator does not take direct remote control, but instead authorizes the car to cross the solid line when it is safe to do so. Thrun predicts that in the future such operators may each monitor thousands of autonomous vehicles at a time. Meanwhile, more limited forms of autonomy are gradually being added to existing cars. The Society of Automotive Engineers (SAE) has drawn up a scale that divides driving automation into five levels above Level 0 (no automation). Level 1 covers basic assistance such as cruise control. Level 2 adds features such as lane keeping that let cars drive themselves on highways, but still requires the driver to pay attention. Audi's A8, launched this year, is the first model to reach Level 3: it can drive itself and monitor its surroundings, but the driver must take over promptly when the system asks. Waymo, Uber and other companies are trying to jump straight to Level 4, at which the vehicle is fully autonomous under certain conditions, such as in particular parts of a city. Some industry insiders believe that the partial automation of Levels 2 and 3 is unsafe, because even while the system controls the vehicle, the driver must stay alert at all times, which is hard for people to do. In May 2016 a Tesla Model S crashed into a truck, killing its driver.
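
The levels described above can be summarized as a small lookup table. The sketch below encodes them as a Python `IntEnum`. The member names paraphrase SAE's J3016 descriptions, Level 5 (full automation under all conditions) is included for completeness, and the `driver_role` helper is a hypothetical simplification of the driver's responsibilities at each level.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving-automation levels, paraphrasing SAE J3016."""
    NO_AUTOMATION = 0           # human does all the driving
    DRIVER_ASSISTANCE = 1       # basic assistance, e.g. cruise control
    PARTIAL_AUTOMATION = 2      # e.g. lane keeping on highways; driver must keep watching
    CONDITIONAL_AUTOMATION = 3  # car drives and monitors; driver takes over on request
    HIGH_AUTOMATION = 4         # fully autonomous, but only under certain conditions
    FULL_AUTOMATION = 5         # fully autonomous under all conditions


def driver_role(level: SAELevel) -> str:
    """Hypothetical one-line summary of what the human must do at each level."""
    if level <= SAELevel.PARTIAL_AUTOMATION:
        return "must drive or supervise at all times"
    if level == SAELevel.CONDITIONAL_AUTOMATION:
        return "must take over when the system requests it"
    if level == SAELevel.HIGH_AUTOMATION:
        return "not needed within the system's operating conditions"
    return "not needed at all"


for level in SAELevel:
    print(f"Level {level.value} ({level.name}): driver {driver_role(level)}")
```

Using an `IntEnum` keeps the levels ordered, so comparisons such as `level <= SAELevel.PARTIAL_AUTOMATION` read the same way as the prose.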
Investigators found that despite warnings from the Autopilot system, the driver had failed to pay attention to the road. Tesla's Autopilot is a Level 2 system. One problem facing self-driving cars is that roads are built for human drivers, with whom they must share them. People communicate using lights and other non-verbal signals, which vary from place to place. Amnon Shashua, CTO of Mobileye, a maker of self-driving technology, believes that autonomous vehicles may eventually be able to adapt to local conditions; driving in Boston, for example, is more aggressive than in California. "We have to make cars work in the world as it is today," said Chris Urmson, CEO of the self-driving car company Aurora. In the future, though, things may get easier. There may be roads or zones dedicated to autonomous vehicles, with special equipment to support them, known as V2I (vehicle-to-infrastructure) technology. In some areas where self-driving cars already operate, traffic lights have been modified. In the future, V2I and V2V (vehicle-to-vehicle) technologies could help autonomous vehicles coordinate better with each other. The public seems mainly concerned about two potential risks associated with autonomous vehicles. The first is how they should handle moral dilemmas, such as choosing between hitting a group of children and hitting another car; many people in the industry believe such dilemmas do not reflect the real world. The second is cyber-attack: a self-driving car is essentially a computer on wheels, which could be hijacked or sabotaged remotely. Engineers in the autonomous-driving industry insist, however, that they take network security very seriously, and the multiple redundant sensors and control systems they build provide a degree of protection.
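
The idea that redundant sensors provide a safety net can be illustrated with a simple cross-check: compare several independent readings of the same quantity and, if they disagree or too few are available, fall back to a safe stop. This is a hypothetical sketch, not any manufacturer's actual fault-handling logic; the function names, the use of the median and the 0.5 m/s tolerance are all assumptions.

```python
from statistics import median
from typing import Optional


def cross_check(readings: list[float], tolerance: float) -> tuple[Optional[float], bool]:
    """Compare redundant readings of one quantity.

    Returns the median reading and whether all readings agree with it to within
    `tolerance`. Fewer than two readings means there is nothing to cross-check,
    which is treated as a fault.
    """
    if len(readings) < 2:
        return (readings[0] if readings else None), False
    consensus = median(readings)
    agree = all(abs(r - consensus) <= tolerance for r in readings)
    return consensus, agree


def choose_action(speed_readings_mps: list[float], tolerance_mps: float = 0.5) -> str:
    """Hypothetical policy: keep driving only if redundant speed sensors agree."""
    _, ok = cross_check(speed_readings_mps, tolerance_mps)
    return "continue" if ok else "safe_stop"


print(choose_action([12.0, 12.1, 11.9]))  # continue
print(choose_action([12.0, 12.1, 20.0]))  # safe_stop
```

With three sensors the median still gives a sensible speed estimate when one reading is wildly wrong, but this conservative policy stops anyway, treating any disagreement as abnormal behavior.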
If any part of an autonomous vehicle starts to behave abnormally, for whatever reason, the car will stop. "It's easier to kill people with an ordinary car than with a driverless one," Sapet joked. Self-driving vehicles will soon enter our lives, at least in good weather and orderly environments. "Once you find the key to the problem, it can gradually be solved completely," Urmson said. From impossible to possible, and from possible to everyday reality: although the public still has concerns, autonomous driving is developing faster and faster.

Speakers are one of the most common output devices used with computer systems. Some speakers are designed to work specifically with computers, while others can be hooked up to any type of sound system. Regardless of their design, the purpose of speakers is to produce audio output that can be heard by the listener.

Speakers are transducers that convert electrical signals into sound waves. They receive audio input from a device such as a computer or an audio receiver, and this input may be in either analog or digital form. Analog speakers simply amplify the analog signal and turn it into sound waves. Since sound waves are analog, digital speakers must first convert the digital input to an analog signal and then generate the sound waves.
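
The digital-to-analog step can be modeled, in idealized form, as mapping each digital sample to a voltage. The sketch below assumes signed 16-bit PCM samples (the format used on audio CDs) and a hypothetical full-scale output of ±1 V; a real converter also smooths the resulting steps into a continuous waveform, which this sketch does not attempt.

```python
def pcm16_to_volts(samples: list[int], full_scale_volts: float = 1.0) -> list[float]:
    """Idealized DAC: map signed 16-bit PCM samples to output voltages.

    -32768 maps to -full_scale_volts; 32767 maps to just under +full_scale_volts.
    """
    volts = []
    for s in samples:
        if not -32768 <= s <= 32767:
            raise ValueError(f"sample {s} is outside the signed 16-bit range")
        volts.append(s / 32768 * full_scale_volts)
    return volts


print(pcm16_to_volts([0, 16384, -32768]))  # [0.0, 0.5, -1.0]
```

Dividing by 32768 (a power of two) keeps the mapping exact for these values and means the most negative sample reaches exactly the negative full-scale voltage.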

The sound produced by speakers is defined by frequency and amplitude. The frequency determines how high or low the pitch of the sound is. For example, a soprano singer's voice produces high-frequency sound waves, while a bass guitar or kick drum generates sounds in the low-frequency range. A speaker system's ability to accurately reproduce sound frequencies is a good indicator of how clear the audio will be. Many speakers include multiple speaker cones for different frequency ranges, which helps produce more accurate sound in each range. Two-way speakers typically have a tweeter and a mid-range speaker, while three-way speakers have a tweeter, mid-range speaker, and subwoofer.
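
Frequency and amplitude correspond directly to the terms of a pure tone, A·sin(2πft): f sets the pitch and A sets the loudness. The sketch below generates such a tone and routes a frequency to one driver of a three-way speaker as described above. The 500 Hz and 3 kHz crossover points and the 48 kHz sample rate are hypothetical round numbers; real designs choose crossover points to suit their drivers.

```python
import math


def tone(frequency_hz: float, amplitude: float, sample_rate_hz: int = 48_000,
         duration_s: float = 0.01) -> list[float]:
    """Sample a pure tone A * sin(2*pi*f*t): f sets pitch, A sets loudness."""
    n = round(sample_rate_hz * duration_s)
    return [amplitude * math.sin(2 * math.pi * frequency_hz * i / sample_rate_hz)
            for i in range(n)]


def driver_for(frequency_hz: float,
               crossovers_hz: tuple[float, float] = (500.0, 3000.0)) -> str:
    """Route a frequency to a driver in a three-way speaker.

    The crossover points are hypothetical; real designs pick them per driver.
    """
    low, high = crossovers_hz
    if frequency_hz < low:
        return "subwoofer"
    if frequency_hz < high:
        return "mid-range"
    return "tweeter"


for f in (60, 1_000, 8_000):  # low, middle and high example frequencies
    print(f, "Hz ->", driver_for(f))
```

A real crossover is a set of filters that split the signal gradually around each crossover point; the hard thresholds here only illustrate which range each driver covers.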
